by Ella McPherson

The ‘data walkshop,’ led by Alison Powell, of the LSE’s Media and Communications Department, and Alex Taylor, of Microsoft Research, was a wonderful way to kick off our year of Ethics of Big Data events. As Alison explained, she modelled the concept of the walkshop after Laura Forlano’s flash mob ethnography approach. Our remit was to wander the streets, collaboratively spotting data (our instructions purposefully left ‘data’ and ‘big data’ undefined). Through exploring environments new to us with an ethnographic eye, the idea was that we would naturally begin to reflect on our understandings of data and how data is constructed and controlled. We also wanted the process to highlight that underpinning any considerations of the ethics of big data are particular understandings of what data is in the first place.

Our participants were a mix of academics, residents, and members of government interested in data and cities. Our location was not accidental; Tenison Road, on which the Microsoft building where we gathered is located, is the subject of a research project Alex is involved with on the lived experience of data for local residents and workers. Our assignment was to pay special attention to data rich areas, to data calm areas, and to places and moments when data and bodies meet.

Without further ado, we were off into the autumnal air in three groups: one towards the Cambridge railway station, one towards the new developments to the left of the station (if you are facing it), and one down Tenison Road. Volunteers were enlisted in each group to photograph, map, and take ethnographic notes.

I was part of the group that wandered, at a snail’s pace, down Tenison Road, as we each frequently stopped, hailing the group into a huddle, to examine and analyse data we encountered – and even to interrogate individuals who were working with data in the environment.

Our first stop was the Microsoft building on the corner of Tenison Road and Station Road. Many of us had cycled to the seminar and had our hopes raised and dashed by the half-empty cycle racks right outside, clearly labelled ‘Microsoft employee bicycles only. Unauthorised bicycles will be removed.’ Scanning the surroundings, we saw no clear indication of how this would be enforced, yet knowing the business of the building beside us, many of us confessed to being scared off by the possibility that a sophisticated, automated, and invisible bicycle monitoring system was in place. This led to a discussion about the power inherent to the ownership and control of data collection infrastructure and of the data itself – and how the mere suggestion of these is enough to bend the behaviour of those without control to the benefit of those who have it. As we snooped around and photographed the building, one member framed our actions as surveilling back, or sousveillance. She did this in a worried tone, as she had recently been questioned by the police for photographing a power plant.

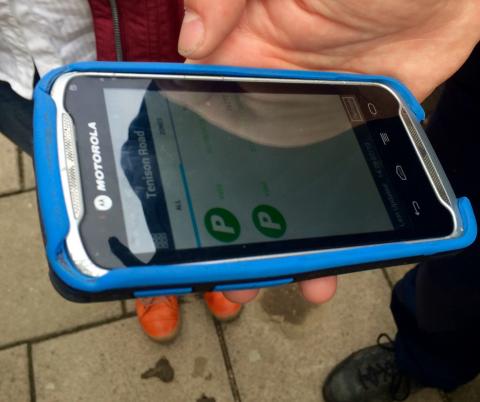

Across the street, we noticed a Pay-and-Display parking machine with a sign on it explaining how to pay via a mobile app called RingGo. We discussed how several of us had done this because of the convenience of not having to dig around under the car seats for spare change, but wondered, as with many convenience-for-data arrangements of contemporary life, what we were giving up by doing so. Luckily, a Civil Enforcement Officer happened to walk down the street at that moment, so we pounced at the chance to ask him!

He showed us his phone, which had his own version of the app (the system is the product of a private company), and said that he gets the registration numbers and the parking expiration times, but that he still has to walk the streets to check whether cars are still parked past their deadlines. We asked how else technology comes into his job, and he told us that he also monitors the bus lane CCTV cameras. These photograph every vehicle in the bus lane that is not a bus, but he then has to screen the images for actual violations of the bus lane law; for example, taxis are exempt, and he won’t issue tickets in cases of understandable violations, like when a car moves into the bus lane to allow an ambulance to pass. This led us to reflect on our assignment to search for the intersection of data and bodies, in that these were clear cases where automated data was only useful once subjected to human judgement.

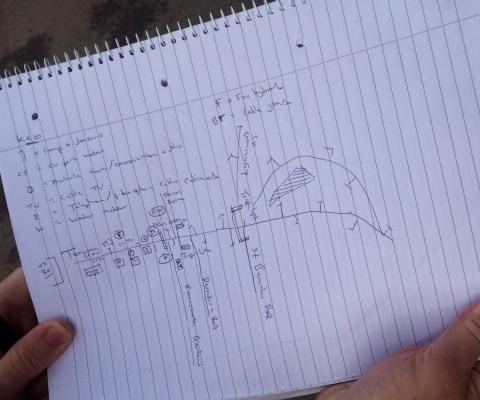

Along the way, our group mapmaker had been plotting the CCTV cameras and the cable cabinets, which are solemnly painted, waist-high boxes on the sidewalk that house telephone and internet wires. Suddenly, we spotted a truck belonging to OpenReach, a telecommunications company – did this mean we could peek into an open cabinet undergoing repairs?

It would be fair to say we ran across the street in excitement and found a manhole cover displaced, revealing spaghetti bunches of colourful wires above a puddle of water lining the cavern. We greeted the OpenReach employee, who showed us how the connections between the phone and internet cables for the surrounding 100 homes were spliced to wires connected to underground cables. Each splice was held together with a small, clear plastic cap and a tiny dollop of grease. Seeing this brought home for us the sheer ubiquity of data infrastructure all around us and the tangible materiality of that infrastructure (plastic caps! grease!) in contrast to the ephemeral, digital spaces to which it connects us.

As if on cue, we spotted something glimmering on the sidewalk and approached to see it was a tiny green and purple circuit board, resplendent as a beetle. It reminded us of the aesthetic appeal of aspects of big data – visualizations as well as infrastructure – that can often be quite beautiful, perhaps disarmingly so.

On our return journey, one of our group members pointed to the door number of a house and remarked that, previously, that would have been just a door number. It would have been a single datapoint from which it would take a significant amount of effort to extract other datapoints. Now, however, you can be walking down the street and put the door number into the Zoopla app on your iPhone to find out how much the house is worth, what it recently sold for, and even what it looks like inside. You could Google it to see if you could find out the owners’ names, then run those through the deep web app pipl.com. In a matter of minutes, standing on the street corner, you would be able to paint a picture – vivid even if constrained by the parameters of digital footprints – of the people living inside.

Back at Microsoft, warmed up with tea and biscuits, the groups exchanged their findings. The group that had surveyed the Cambridge Railway Station developed a theme of transparency, power, and data. They shared images of acts of transparency that they interpreted as a sleight of hand, obscuring the power imbalance between the object and subject of data collection. These included a window onto a construction site as well as a CCTV sign which, as they said, needs no explanation (although my image is blurry, so you have to look hard for the white tape).

The group that studied the new build area spoke of data rich versus data calm environments, and how this is in the eye, or the disciplinary lens, of the observer. Their setting seemed to many of them data calm – but not to the botanist, as one member pointed out, because a wealth of fallen leaves dotted the pavement, yielding all sorts of data. We discussed how data calmness relates to privacy, in that the more contemporary residences in the neighbourhood, with their greater uniformity, revealed less about their residents; this brought up, of course, the idea that privacy corresponds to privilege.

The exercise, and the discussion that ensued, were a great foundation for our year reflecting on and building resources about the ethics of big data. Given the interdisciplinary dialogues that we aim to foster, it was useful to understand how decisions about ethics in the collection, analysis, and use of big data are framed by perceptions of what data is in the first place. They are also framed by how we decide to collect the data; as Alex pointed out, there is an ethics to the design of data infrastructure, particularly with respect to inclusivity. Returning to the contested definition of big data, Alison pointed out that one way to understand it is as analysis across data and databases that affords the gleaning of information that would not have been attainable before – such as the portrait of the residents of a particular house. The ease with which this can be gleaned means that there is little friction to create the moments in which we might previously have stopped to think, reflexively and critically, about the ethics of the analysis. Finally, the exercise brought up questions about the ethics of the use of the data (which both our speakers have written about in the articles linked below). The frisson my group felt about invisible surveillance was enough to highlight the impact of exclusion from access to and knowledge of data and data infrastructure. Subjects of data should be able to understand the data collection process and to use the data to their own ends – a consideration that should be built into big data research design.

If you want to read more, see:

Alison’s article with colleague Nick Couldry, ‘Big Data from the Bottom Up’

Alex’s article with colleagues Siân Lindley, Tim Regan, and David Sweeney: ‘Data and Life on the Street’

Rob Kitchin’s book: The Data Revolution